Syntactic Parsing – the content:

In a world where technology is rapidly advancing, language processing has become an essential component of modern computing. Syntactic parsing plays a critical role in this process by enabling computers to analyze natural language and extract valuable information from it. In simple terms, syntactic parsing involves breaking sentences down into their constituent parts, such as noun phrases, verb phrases, and prepositional phrases, and identifying how those parts relate to one another.

Syntactic parsing can be seen as the bridge between human language and computer code. It allows machines to understand the grammatical structure of sentences, which is crucial for tasks like text-to-speech conversion, machine translation, and sentiment analysis. As the amount of data generated each day continues to grow exponentially, effective syntactic parsing techniques have become more important than ever before.

Despite its importance in natural language processing (NLP), syntactic parsing remains a challenging task due to the complexity and ambiguity inherent in human language. Researchers continue to develop new algorithms that improve accuracy while reducing computational costs. This article aims to provide an overview of what syntactic parsing is, how it works, and why it matters for those seeking greater freedom through technological advancements.

The Definition

The process of understanding language is a complex one. To interpret the meaning behind words and sentences, we rely on syntactic parsing – a technique used to analyze the grammatical structure of the text. Syntactic parsing involves breaking down a sentence into its constituent parts, identifying the relationships between those parts, and using this information to derive meaning from the sentence.

Syntactic parsing is an essential component of natural language processing (NLP), which seeks to make computers understand human language. NLP has been rapidly growing in popularity due to advancements in technology and increased demand for intelligent systems capable of interacting with humans. As such, syntactic parsing has become increasingly crucial for tasks like machine translation, sentiment analysis, speech recognition, and more.

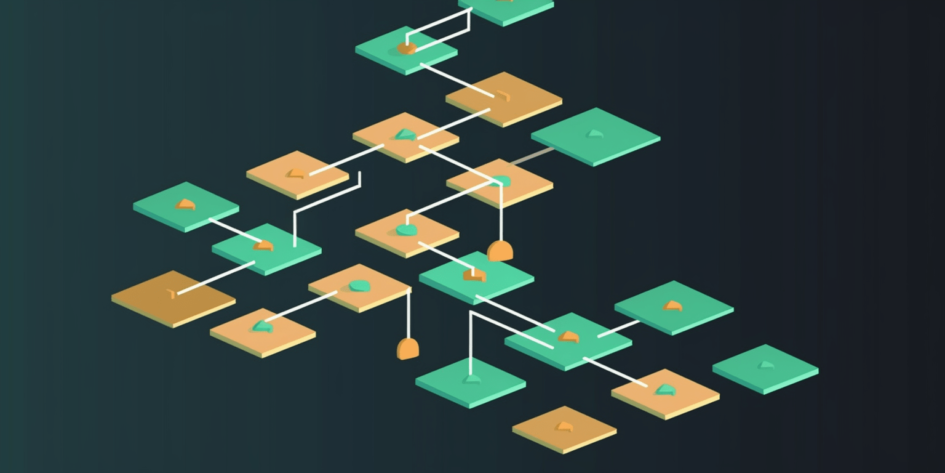

To fully appreciate the significance of syntactic parsing, it’s important first to understand what it entails. The process begins by analyzing individual words in a sentence and determining their part-of-speech tags (e.g., noun, verb). From there, parsers use algorithms to identify dependencies between these words based on grammar rules or statistical models. These dependencies can be visualized as trees that represent the hierarchical structure of the sentence accurately.
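The two steps described above (tagging each word, then linking words through dependencies) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `LEXICON` lookup stands in for a trained tagger, and the heads and relation labels in `ARCS` are written by hand in a Universal Dependencies-like style rather than produced by a real parser.

```python
from dataclasses import dataclass

# Hypothetical toy lexicon; a real tagger would use a trained model.
LEXICON = {"the": "DET", "cat": "NOUN", "sat": "VERB", "on": "ADP", "mat": "NOUN"}

@dataclass
class Token:
    index: int     # 1-based position in the sentence
    word: str
    pos: str       # part-of-speech tag
    head: int      # index of the head token; 0 marks the root
    relation: str  # label of the dependency to the head

def tag(words):
    """Look up a part-of-speech tag for each word ('X' if unknown)."""
    return [LEXICON.get(word.lower(), "X") for word in words]

words = "The cat sat on the mat".split()
tags = tag(words)

# Hand-assigned (head, relation) pairs, one per word (an assumption for this example).
ARCS = [(2, "det"), (3, "nsubj"), (0, "root"), (6, "case"), (6, "det"), (3, "obl")]

tokens = [
    Token(i + 1, word, pos, head, relation)
    for i, (word, pos, (head, relation)) in enumerate(zip(words, tags, ARCS))
]

def render(tokens, head=0, depth=0):
    """Return the dependency tree as indented lines, starting from the root."""
    lines = []
    for token in tokens:
        if token.head == head:
            lines.append("  " * depth + f"{token.word} ({token.pos}, {token.relation})")
            lines.extend(render(tokens, token.index, depth + 1))
    return lines

print("\n".join(render(tokens)))
```

The printed tree has the verb "sat" at the top, with "cat" and "mat" indented beneath it and their determiners one level deeper, which is the hierarchical structure the paragraph refers to.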

Given how central NLP is becoming in our daily lives (think Siri or Alexa), we cannot overemphasize how vital syntactic parsing is. It helps machines “understand” human communication better than ever before. Whether you’re asking Siri about tomorrow’s weather forecast or chatting with customer service representatives online, chances are high you’re communicating with artificial intelligence employing some form of syntactic parsing techniques!

Importance Of Syntactic Parsing In Natural Language Processing

The adage “knowledge is power” holds true in the field of natural language processing, particularly when it comes to syntactic parsing. Syntactic parsing refers to the process of analyzing a sentence’s structure according to grammatical rules as a step toward understanding its meaning. It has become a critical component in various applications such as machine translation, sentiment analysis, and speech recognition.

The importance of syntactic parsing lies in its capability to extract valuable insights from unstructured text data. It allows computers to identify relationships between different parts of a sentence and assign roles to each element within it. This ability enables machines to understand human language more accurately, which is vital for tasks like chatbots or virtual assistants that depend on accurate comprehension.

Furthermore, syntactic parsing plays a significant role in improving the overall efficiency of natural language processing systems by reducing ambiguity and increasing accuracy levels. By breaking down sentences into their constituent parts, machines can easily extract relevant information from large volumes of text data with greater precision.

In conclusion, understanding the importance of syntactic parsing in NLP is essential for developing cutting-edge technologies capable of comprehending human languages effectively. In the next section, we will discuss types of syntactic parsing algorithms that have been developed over time to meet this growing demand for advanced computational linguistics solutions.

Types Of Algorithms

Syntactic parsing is a crucial component of natural language processing, as it involves analyzing the grammatical structure of sentences to extract meaningful information. Various types of syntactic parsing algorithms exist, each with its advantages and disadvantages.

One common type of algorithm is known as constituency-based parsing, which involves breaking down a sentence into nested phrases (e.g., noun phrases, verb phrases, prepositional phrases) and organizing them into a hierarchical structure. Another approach is dependency-based parsing, which focuses on identifying how words within a sentence relate to one another through directed links or dependencies.
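To make the contrast concrete, here is a small sketch of how the same sentence might be stored under each view. The nested-tuple layout, the example sentence, and the relation names are assumptions chosen for illustration; real parsers and treebanks use their own formats.

```python
# Constituency view: nested (label, child, ...) tuples; leaves are plain strings.
constituency = (
    "S",
    ("NP", ("DET", "the"), ("NOUN", "dog")),
    ("VP", ("VERB", "chased"), ("NP", ("DET", "a"), ("NOUN", "ball"))),
)

# Dependency view: (head, relation, dependent) triples linking words directly.
dependencies = [
    ("chased", "nsubj", "dog"),
    ("dog", "det", "the"),
    ("chased", "obj", "ball"),
    ("ball", "det", "a"),
]

def bracketed(node):
    """Render a constituency tree in the familiar bracketed notation."""
    if isinstance(node, str):
        return node
    label, *children = node
    return "(" + label + " " + " ".join(bracketed(child) for child in children) + ")"

def leaves(node):
    """Collect the words of a constituency tree from left to right."""
    if isinstance(node, str):
        return [node]
    return [leaf for child in node[1:] for leaf in leaves(child)]

print(bracketed(constituency))
# (S (NP (DET the) (NOUN dog)) (VP (VERB chased) (NP (DET a) (NOUN ball))))
print(leaves(constituency))
# ['the', 'dog', 'chased', 'a', 'ball']
```

The constituency tree introduces phrase labels such as NP and VP that do not correspond to any single word, whereas the dependency list contains only word-to-word links.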

There are also statistical approaches to syntactic parsing, which use machine learning techniques to analyze large sets of annotated data and make predictions based on patterns found in the data. These methods can be particularly effective when dealing with languages that have complex grammar or non-standard syntax.
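As a rough illustration of learning from annotated data, the sketch below estimates rule probabilities for a probabilistic context-free grammar by counting how often each rule occurs in a tiny treebank. The three hand-written trees are hypothetical, and relative-frequency counting is just one simple estimation method, not necessarily what any particular parser uses.

```python
from collections import Counter

# Hypothetical three-sentence treebank as nested (label, child, ...) tuples.
treebank = [
    ("S", ("NP", "she"), ("VP", ("V", "sleeps"))),
    ("S", ("NP", "he"), ("VP", ("V", "reads"), ("NP", "books"))),
    ("S", ("NP", "they"), ("VP", ("V", "read"), ("NP", "papers"))),
]

def rules(node):
    """Yield one (lhs, rhs) rule for every internal node of a tree."""
    label, *children = node
    rhs = tuple(child if isinstance(child, str) else child[0] for child in children)
    yield (label, rhs)
    for child in children:
        if not isinstance(child, str):
            yield from rules(child)

# Count each rule, then count how often each left-hand side appears overall.
rule_counts = Counter(rule for tree in treebank for rule in rules(tree))
lhs_counts = Counter()
for (lhs, _), count in rule_counts.items():
    lhs_counts[lhs] += count

# Relative-frequency estimate: P(lhs -> rhs) = count(lhs -> rhs) / count(lhs).
probabilities = {
    rule: count / lhs_counts[rule[0]] for rule, count in rule_counts.items()
}

print(probabilities[("S", ("NP", "VP"))])   # 1.0
print(probabilities[("VP", ("V", "NP"))])   # two of the three VPs take an object
```

With more annotated trees the estimates become more reliable, which is why the size and quality of the training data matter so much for these methods.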

Regardless of the method used, however, there remain significant challenges in developing accurate syntactic parsers. One major issue is dealing with ambiguity in language; for example, many words can have multiple meanings depending on the context, making it difficult for machines to determine the correct interpretation. Additionally, errors can occur due to incomplete or inconsistent training data or variations in dialects across different regions.

Despite these challenges, continued research and development in syntactic parsing remain vital for advancing our ability to process and understand human language. In the next section about challenges in syntactic parsing, we will explore some of these obstacles in more detail and discuss potential solutions for overcoming them.

Challenges In Syntactic Parsing

Syntactic parsing is a crucial aspect of natural language processing that involves analyzing the structure and grammatical relationships within sentences. Despite its importance, syntactic parsing poses several challenges for researchers attempting to develop accurate algorithms.

Firstly, one of the significant difficulties in syntactic parsing is dealing with the ambiguity present in human language. Many words can have multiple meanings depending on their context, which makes it challenging to determine the correct interpretation for each word in a sentence. Additionally, some sentences may contain complex constructions or idiomatic expressions that are difficult to parse accurately.
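Ambiguity also shows up at the level of structure, not only word meaning. A classic case is "I saw the man with the telescope", where the phrase "with the telescope" can attach either to "saw" or to "the man". The sketch below counts the parses licensed by a toy grammar using a CKY-style chart. The grammar and lexicon are assumptions made up for this example.

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form (an assumption for illustration).
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),
    ("PP", "P", "NP"),
]
LEXICON = {
    "I": ["NP"], "saw": ["V"], "the": ["Det"],
    "man": ["N"], "with": ["P"], "telescope": ["N"],
}

def count_parses(words, root="S"):
    """Count distinct parse trees for `words` with a CKY-style chart."""
    n = len(words)
    # chart[(i, j)][A] = number of trees rooted in A that cover words[i:j]
    chart = defaultdict(lambda: defaultdict(int))
    for i, word in enumerate(words):
        for category in LEXICON.get(word, []):
            chart[(i, i + 1)][category] += 1
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):  # split point between the two children
                for parent, left, right in BINARY_RULES:
                    chart[(i, j)][parent] += chart[(i, k)][left] * chart[(k, j)][right]
    return chart[(0, n)][root]

print(count_parses("I saw the man with the telescope".split()))  # 2
print(count_parses("I saw the man".split()))                     # 1
```

Both parses are grammatical, so a parser needs extra information, such as rule probabilities or context, to prefer one over the other.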

Secondly, another challenge faced by developers of syntactic parsers is managing computational complexity. A naive parser would have to examine every possible combination of words and grammar rules to construct an appropriate parse tree for a given sentence, and the number of candidate structures grows very quickly as sentences get longer and more complex.
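A quick calculation gives a feel for that growth. The number of distinct binary bracketings of a sentence with n words is a Catalan number, so exhaustive enumeration becomes impractical quickly. The function name below is hypothetical, and the count ignores grammar constraints, so it is best read as an upper bound on the shapes of binary trees rather than on real parses.

```python
from math import comb

def binary_bracketings(n_words):
    """Number of binary tree shapes over n_words leaves (Catalan number C_{n-1})."""
    if n_words < 1:
        raise ValueError("a sentence needs at least one word")
    k = n_words - 1
    return comb(2 * k, k) // (k + 1)

for n in (3, 5, 10):
    print(n, binary_bracketings(n))
# 3 2
# 5 14
# 10 4862
```

Chart-based algorithms such as CKY sidestep this blow-up by sharing sub-results across candidate trees, which brings parsing with a context-free grammar down to time cubic in sentence length.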

Thirdly, there are issues related to domain-specificity and generalization when developing syntactic parsers. The same sentence may be parsed differently based on the specific domain under consideration (e.g., medical vs. legal). Thus, developing a universal parser capable of handling any text type remains elusive.

Finally, obtaining the annotated data sets required for training machine learning models is also an issue in syntactic parsing research. The annotation process itself can be time-consuming and expensive, since it often involves manual work carried out by experts.

Despite these challenges posed by syntactic parsing, recent advancements in artificial intelligence techniques such as deep neural networks have led to considerable progress in this field. In subsequent sections, we will explore various applications where syntactic parsing has proven useful while highlighting emerging trends and future directions for development.

Applications

Syntactic parsing is a crucial component of natural language processing (NLP) that involves analyzing the grammatical structure of sentences. It serves as the foundation for various applications, including machine translation, information retrieval, and text summarization.

One way to picture syntactic parsing is to compare it to taking apart a complex jigsaw puzzle. Just as each piece in a puzzle has its own place and purpose, every word in a sentence has its part-of-speech tag and its relationships with other words. Thus, by breaking the sentence down into smaller components or building blocks, syntactic parsers can identify the sentence’s hierarchical structure and represent it as a syntax tree.

Syntactic parsing plays an essential role in machine translation systems as it helps translate source language sentences precisely into target languages. By understanding the underlying grammar of sentences, NLP models can generate more accurate translations that capture nuances in meaning that might otherwise get lost.

Another application is information retrieval where syntactic parsers help extract relevant information from unstructured data sources such as web pages or social media posts. Parsing techniques enable developers to build search engines that return results based on relevance rather than just keyword matching.
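As one hedged illustration of extraction from parsed text, the sketch below pulls subject-verb-object triples out of a dependency parse. The `parsed` arcs are written by hand as a stand-in for real parser output, and `extract_svo` is a hypothetical helper that only handles the simplest case of a verb with an `nsubj` and an `obj` dependent.

```python
# Hand-written stand-in for parser output on "The company acquired a startup":
# (head, relation, dependent) triples.
parsed = [
    ("acquired", "nsubj", "company"),
    ("company", "det", "The"),
    ("acquired", "obj", "startup"),
    ("startup", "det", "a"),
]

def extract_svo(dependencies):
    """Return (subject, verb, object) triples found in the dependency arcs."""
    subjects, objects = {}, {}
    for head, relation, dependent in dependencies:
        if relation == "nsubj":
            subjects.setdefault(head, []).append(dependent)
        elif relation == "obj":
            objects.setdefault(head, []).append(dependent)
    return [
        (subject, verb, obj)
        for verb, verb_subjects in subjects.items()
        for subject in verb_subjects
        for obj in objects.get(verb, [])
    ]

print(extract_svo(parsed))  # [('company', 'acquired', 'startup')]
```

A keyword search for "company" and "startup" cannot tell who acquired whom, while the triple records that relationship explicitly.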

In summary, syntactic parsing is vital in many NLP applications because it enables machines to understand human language better. With this knowledge comes freedom: freedom from relying solely on literal translations, and the ability to make sense of vast amounts of unstructured data quickly. As technology continues to advance at breakneck speed, we are sure to see even more exciting developments arising from these fundamental parsing techniques.

Conclusion

Syntactic parsing is the process of analyzing natural language sentences into their constituent parts to understand their structure, meaning, and grammatical rules. This task plays a crucial role in Natural Language Processing (NLP) as it helps computers comprehend human language and facilitates communication between humans and machines.

Syntactic parsing algorithms can be broadly classified into two categories: constituency-based and dependency-based parsers. While both types have their advantages and disadvantages, they are equally important for various NLP applications such as sentiment analysis, machine translation, speech recognition, and text summarization.

Despite advancements in syntactic parsing technology, challenges still exist due to ambiguities that arise from words with multiple meanings, idiomatic expressions, or incomplete sentences. However, researchers continue to develop more robust models that rely on deep neural networks to overcome these limitations.

In conclusion, syntactic parsing is an essential component of Natural Language Processing that enables machines to interpret human language accurately. Its importance cannot be overstated given its impact on numerous applications within the field of Artificial Intelligence. To illustrate this point further, consider the metaphor of a traveler trying to navigate without a map. Just as a map guides travelers through unknown lands by providing directions and landmarks along the way, syntactic parsing provides computers with linguistic maps that guide them through complex grammatical structures, enabling them to communicate effectively with humans.

Frequently Asked Questions

What Programming Languages Are Commonly Used For Syntactic Parsing?

Syntactic parsing is a process that involves the analysis of natural language sentences to determine their grammatical structure. The task can be achieved using different techniques, including rule-based and machine-learning approaches. However, for syntactic parsing to take place, programming languages are necessary tools.

Some commonly used programming languages for syntactic parsing include Python, Java, C++, and Perl. These languages offer libraries and frameworks that help in developing algorithms for analyzing sentence structures. For instance, the Stanford Parser is an open-source tool written in Java that implements probabilistic context-free grammar (PCFG) models for English language parsing. Similarly, the NLTK library in Python offers resources such as a sample of the Penn Treebank corpus and support for dependency grammars that assist developers in creating parsers.

Although there are several programming languages used for syntactic parsing, each has its strengths and limitations depending on the application requirements. While Python may be more accessible due to its easy-to-learn syntax, it may not be suitable when dealing with large datasets requiring high computational power. On the other hand, Java can handle complex tasks but may have a longer development time due to its verbosity.

In conclusion, effective syntactic parsing requires knowledge of programming languages that offer appropriate libraries or frameworks for building parsers. Understanding the strengths and weaknesses of different programming languages helps developers choose the best approach for their specific needs. Ultimately, the successful implementation of syntactic parsers enhances natural language processing capabilities across many fields like artificial intelligence and linguistics research.

How Does Syntactic Parsing Differ From Semantic Parsing?

Syntactic parsing is a process that involves analyzing the grammatical structure of a sentence. It aims to understand the role of each word in a sentence and how they relate to each other syntactically. On the other hand, semantic parsing focuses on understanding the meaning behind words and phrases in a text. While both processes involve natural language processing (NLP), they differ in their objectives.

Syntactic parsing is concerned with identifying parts of speech, such as nouns, verbs, adjectives, and adverbs, and determining how they are connected through phrases and clauses. This process helps in detecting errors like subject-verb agreement or misplaced modifiers which can hinder effective communication. In contrast, semantic parsing seeks to find meaning beyond syntax by taking into account context and world knowledge.

The difference between these two approaches lies in their respective goals: while syntactic analysis identifies relationships among words based on grammar rules, semantic analysis attempts to capture deeper meaning by considering broader contexts. For example, ‘the cat sat on the mat’ has the same grammatical structure wherever it appears, but a semantic analysis might interpret it differently depending on whether the text is describing an animal’s behavior or discussing furniture placement.

In conclusion, while syntactic and semantic parsing are both NLP techniques used for language processing tasks, they differ significantly in scope and purpose. Syntactic analysis tries to decode the underlying structure of sentences using grammar rules, whereas semantic analysis goes beyond this by attempting to determine what people mean when they use particular words together in specific contexts.

Can Syntactic Parsing Be Used For Non-English Languages?

Syntactic parsing is the process of analyzing natural language sentences based on their grammar and syntax. This approach provides a structured representation of the sentence by identifying its constituent parts, such as nouns, verbs, adjectives, etc., and how they relate to each other in terms of their grammatical roles. While syntactic parsing has been primarily used for English language processing, it can also be applied to non-English languages.

According to recent studies, many researchers have successfully implemented syntactic parsers for languages such as Chinese, Arabic, French, German, Hindi, and Japanese, among others. However, unlike English, for which widely available standardized resources make parser development easier, many other languages require more extensive linguistic knowledge and specialized tools. Some languages present unique challenges that require customized approaches during implementation.

Despite these challenges, research shows that using syntactic parsing for non-English languages has several benefits. These include improving machine translation systems by providing more accurate translations with better word order choices, and helping information extraction tasks such as named entity recognition (NER). This area of research therefore presents ample opportunities for further exploration, especially given that over 60% of internet users speak a language other than English.

In summary, while syntactic parsing has traditionally been used mostly in English language processing applications due to the availability of standard resources, there is growing evidence of its applicability to other languages too. Implementing it for non-English languages presents numerous challenges that need to be addressed through tailored solutions, but incorporating syntactic parsing into NLP pipelines that target multiple languages could lead to improved accuracy and efficiency in various application domains.

How Accurate Are Syntactic Parsing Algorithms?

Syntactic parsing is a process of analyzing a sentence’s structure to establish the relationship between words. It has been used for several purposes, including natural language processing and machine translation. However, how accurate are syntactic parsing algorithms?

Syntactic parsing accuracy mainly depends on the quality of data and models used in training the algorithm. The more comprehensive and diverse the dataset, the better the model can learn from it. Moreover, incorporating contextual knowledge into these models can significantly improve their performance.

Several studies have shown that current syntactic parsing algorithms achieve high accuracies ranging from 85% to over 90%. However, certain factors such as sentence length or complexity may decrease this accuracy rate. For example, longer sentences with multiple clauses tend to be more challenging to parse accurately than shorter sentences with simpler structures.
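For dependency parsers, accuracy figures like these are commonly reported as attachment scores: the unlabeled attachment score (UAS) is the share of words assigned the correct head, and the labeled attachment score (LAS) additionally requires the correct relation label. The sketch below computes both for a single sentence. The gold and predicted analyses are invented for illustration, and the function name is hypothetical.

```python
def attachment_scores(gold, predicted):
    """Return (UAS, LAS) for one sentence.

    Each argument is a list with one (head_index, relation) pair per word.
    """
    if not gold or len(gold) != len(predicted):
        raise ValueError("gold and predicted must be non-empty and equally long")
    total = len(gold)
    correct_heads = sum(g[0] == p[0] for g, p in zip(gold, predicted))
    correct_labeled = sum(g == p for g, p in zip(gold, predicted))
    return correct_heads / total, correct_labeled / total

# Invented analyses of "The cat sat on the mat" (head index 0 marks the root).
gold = [(2, "det"), (3, "nsubj"), (0, "root"), (6, "case"), (6, "det"), (3, "obl")]
predicted = [(2, "det"), (3, "nsubj"), (0, "root"), (3, "case"), (6, "det"), (3, "nmod")]

uas, las = attachment_scores(gold, predicted)
print(f"UAS = {uas:.2f}, LAS = {las:.2f}")  # UAS = 0.83, LAS = 0.67
```

Here one word gets the wrong head and another gets the right head but the wrong label, so LAS comes out lower than UAS. Published scores are normally averaged over an entire test set rather than a single sentence.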

Despite its high accuracy rates, there is still room for improvement in syntactic parsing algorithms. A hyperbole could state that “the sky’s not even close to being the limit” when it comes to developing new models and techniques for improving these systems’ accuracy levels.

As technologies continue advancing towards greater autonomy and freedom of expression, further research will undoubtedly lead us towards ever-more sophisticated approaches capable of understanding human language at an unprecedented level.

Are There Any Ethical Concerns Surrounding The Use Of Syntactic Parsing In Natural Language Processing?

Metaphorically speaking, syntactic parsing is like a surgeon meticulously dissecting and analyzing the structure of human language. It involves breaking down sentences into their constituent parts (e.g., nouns, verbs, adjectives) to understand how they relate to each other and create meaning. While this process has revolutionized natural language processing by enabling machines to comprehend text with greater accuracy, it also raises ethical concerns that cannot be ignored.

Firstly, there are potential biases in training data sets used for developing syntactic parsing algorithms. These biases can result in inaccurate or discriminatory analyses of texts, which can have far-reaching consequences on individuals or groups. Secondly, some argue that relying too heavily on automated processes can lead to a loss of human skills such as critical thinking and creativity. Lastly, there is the issue of privacy – as more personal information is shared online, the use of syntactic parsing could become invasive if not regulated properly.
